Last weekend, Thomas, Jon, Paul and I teamed up to participate in the very first Clojure Cup.

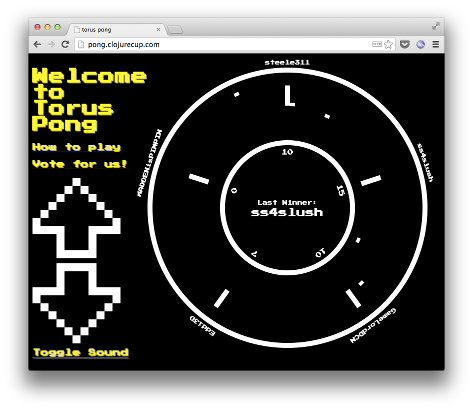

It was great fun and resulted in Torus Pong, a multi-player take on the classic game of Pong (with a twist). You can check it out at pong.clojurecup.com.

If you feel so inclined, please give it a vote here.

The source code is available under the uSwitch GitHub organization at: https://github.com/uswitch/torus-pong

Thomas has written a great post about the process leading up to the “finished” game, the current status, some lessons learned and things we didn’t have time for (lots!).

In this post, I thought I’d share some more technical information about the implementation, in particular how we leveraged the channels and processes provided by core.async to decouple different components and handle asynchrony and communication. Hopefully, it can provide an interesting example of an application using core.async. That said, all code was written in 48 hours, so treat it accordingly.

Most code examples shown have been simplified to illustrate a point, and may not be exactly as they are in the game source code.

The Game

In essence, our idea was to allow multiple players to play a game of Pong together. Each player would get his own paddle and an equal share of the whole playing field, but with a different opponent on each side.

The last playing field would be connected to the first, forming a circular list of playing fields, or, with a bit of imagination, a torus.
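One way to picture that wrap-around (a hypothetical helper, not taken from the game’s source) is to number the playing fields 0 to n-1 and find each field’s two neighbours with mod, so the last field’s right-hand neighbour is the first one:

```clojure
(defn neighbours
  "Hypothetical helper: given the number of playing fields n and a field
  index i (0..n-1), return the indices of the fields to its left and right.
  mod wraps the ends around, so the last field connects back to the first."
  [n i]
  [(mod (dec i) n) (mod (inc i) n)])

(neighbours 4 0) ;=> [3 1]
(neighbours 4 3) ;=> [2 0]
```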

The Problem(s)

As a team, we are all quite green when it comes to game development. This means I’m probably missing a lot of terminology and well-known solutions to typical game programming problems, so bear with me.

During our design discussions in between work on Thursday and Friday, a few distinct problems emerged. I would say the three most important ones were:

- game core: how does the game actually work and how do we implement its rules?

- communication: how do we handle communication between players and server?

- rendering: what should the game look like to the user?

I think one reason we were able to complete anything at all was finding these parts, understanding their interactions and then pulling them apart as much as possible, enabling parallel design, implementation and change.

Game Core

I won’t spend any time on the game rules and their implementation, but we’ll look briefly at the interface to the core game logic.

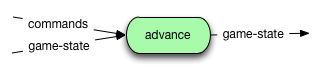

This interface is a single pure function that, given a game-state and some commands from players, produces a new game-state. This function is called each time the game should advance and is responsible for enforcing the rules of the game, physics, scoring etc.
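As a toy illustration of that contract, here is a sketch in which the game-state shape, the command vectors and handle-command are all assumptions rather than the game’s real data model. The point is that advance is just a reduction over the accumulated commands followed by a simulation step, so it can be exercised by calling it with plain data:

```clojure
(def initial-game-state
  ;; Hypothetical shape: player id -> paddle position, plus a tick counter
  {:paddles {} :tick 0})

(defn handle-command
  "Apply one command vector to the game-state. The player id is always the
  last element, matching how commands are tagged before reaching the engine."
  [game-state command]
  (let [[kind & args] command
        id (last args)]
    (case kind
      :player/join  (assoc-in game-state [:paddles id] 0)
      :player/leave (update game-state :paddles dissoc id)
      :player/move  (let [delta (if (= :up (first args)) -1 1)]
                      (if (contains? (:paddles game-state) id)
                        (update-in game-state [:paddles id] + delta)
                        game-state))
      ;; Unknown commands are ignored rather than crashing the engine
      game-state)))

(defn advance
  "Toy version: apply all accumulated commands, then step the simulation."
  [game-state commands]
  (-> (reduce handle-command game-state commands)
      (update :tick inc)))

(advance initial-game-state [[:player/join 1] [:player/move :down 1] [:player/join 2]])
;=> {:paddles {1 1, 2 0}, :tick 1}
```

Because nothing in it touches channels, timers or sockets, a function like this can be unit tested without starting any processes.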

The implementation ended up looking something like this:

```clojure
(defn advance
  "Given a game-state and some commands, advance the game-state one iteration"
  [game-state commands]
  (-> game-state
      (apply-scoring)
      (handle-commands commands)
      (check-for-winner)
      (advance-fields)
      (play-sounds)
      (update-ball-fields)))
```

Engine

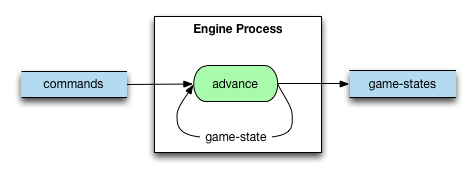

Given the core game function, we needed something that was responsible for feeding it with commands, and applying it to the current game-state when appropriate. We called this part the engine, and this is where core.async enters the picture.

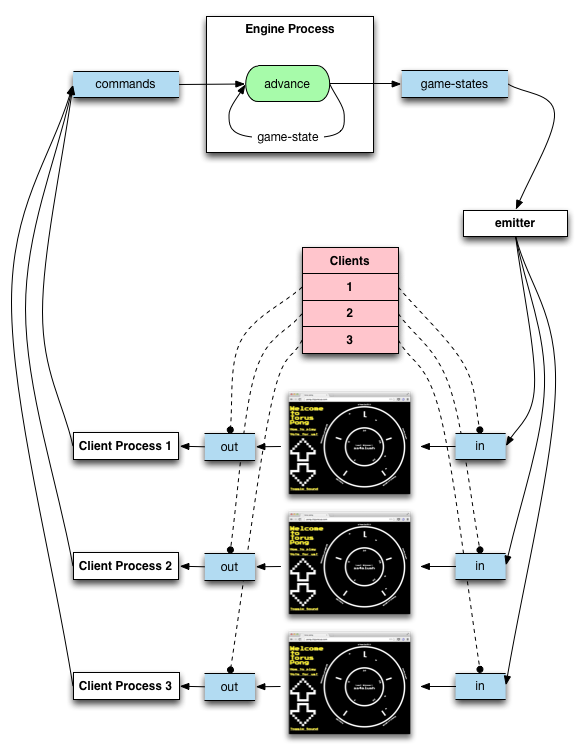

We modeled the engine as a process that:

- takes and accumulates commands from a command channel

- advances the game at some interval, determined by a timeout expiring

- puts each new game-state to a game-state channel
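The three steps above can be tried in isolation. The following is a self-contained toy rather than the game’s engine: it assumes core.async is on the classpath, and toy-engine, the stub advance (which just counts commands) and the 20 ms tick are hypothetical. It uses the same alts!/timeout technique, and additionally closes its output channel when the command channel is closed so that downstream consumers can stop:

```clojure
(require '[clojure.core.async :refer [go chan alts! >! >!! <!! timeout close!]])

(defn toy-engine
  "Hypothetical engine loop: accumulates commands from command-chan and, each
  time a tick-ms timeout expires, puts (advance state commands) on state-chan.
  When command-chan is closed, it closes state-chan and stops."
  [advance initial-state tick-ms command-chan state-chan]
  (go (loop [state    initial-state
             commands []
             timer    (timeout tick-ms)]
        (let [[v c] (alts! [timer command-chan] :priority true)]
          (if (= c command-chan)
            (if (some? v)
              ;; A command arrived: remember it and keep waiting on the same timer
              (recur state (conj commands v) timer)
              ;; command-chan was closed: propagate shutdown downstream
              (close! state-chan))
            ;; The tick expired: advance once with everything accumulated
            (let [new-state (advance state commands)]
              (>! state-chan new-state)
              (recur new-state [] (timeout tick-ms))))))))

;; Usage: a stub advance that treats the state as a running count of commands
(def commands (chan))
(def states (chan 10))
(toy-engine (fn [n cmds] (+ n (count cmds))) 0 20 commands states)
(>!! commands [:player/join 1])
(>!! commands [:player/join 2])
;; Take states until a tick has folded in both commands
(loop [s (<!! states)]
  (if (= 2 s) s (recur (<!! states))))
;=> 2
(close! commands)
```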

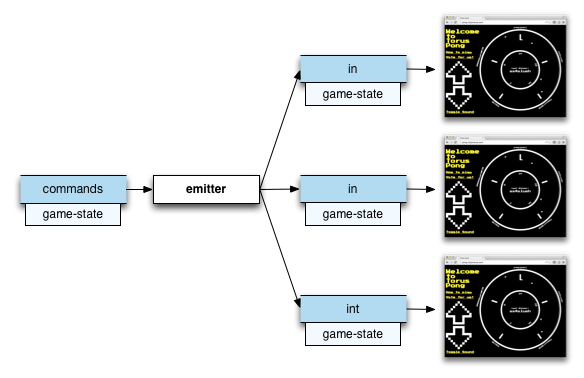

Visually, this looks like this (blue boxes are channels):

The implementation uses alts! to wait for either a command to appear or the timer to elapse, taking the appropriate action in each case.

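```clojure
;; core.async functions used by the snippets in this post
(require '[clojure.core.async :refer [go alts! >! <! timeout]])

(defn engine-process
  [command-chan game-state-chan]
  (go (loop [game-state game-core/initial-game-state
             commands   []
             timer      (timeout params/tick-ms)]
        ;; Wait for whichever comes first: the next command or the end of the
        ;; tick. :priority true makes alts! prefer the timer when both are ready.
        (let [[v c] (alts! [timer command-chan] :priority true)]
          (condp = c
            ;; A nil value means command-chan was closed, so the loop ends
            command-chan (when v
                           (recur game-state (conj commands v) timer))
            timer (let [new-game-state (game-core/advance game-state commands)]
                    (>! game-state-chan new-game-state)
                    (recur new-game-state [] (timeout params/tick-ms))))))))
```

Talking to Clients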

Being a browser-based game, we chose WebSockets for client/server communication. Kevin Lynagh has released an excellent library called jetty7-websockets-async which bridges the gap between the jetty7 WebSocket implementation and core.async in a very elegant way.

The library works as follows:

- you hand it a channel on which you want new WebSocket connections to be put as clients connect

- you can then take new WebSocket connections from this channel, where each connection is represented by a channel pair:
  - in: on which you can put messages that should be sent to the client
  - out: from which you can take messages received from the client

The Connection Process

We have a singleton process that accepts new connections from a connection channel, and then spawns a separate process to handle further communication with the client. A slightly simplified version of the code that does this is as follows:

```clojure
(defn connection-process
  [conn-chan command-chan]
  (go (loop [conn (<! conn-chan)]
        ;; conn-chan yields nil once it is closed, which ends the loop
        (when conn
          (let [{:keys [in out]} conn
                id (next-id!)]
            (client-process id in out command-chan)
            (recur (<! conn-chan)))))))
```

We read from the connection channel. When we get a new connection, we pull out the in and out channels, generate a new unique id for the client and spawn a client process, passing it the id, the in and out channels, as well as the shared command-chan.
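The post doesn’t show next-id!. One minimal way to write it (an assumption, not necessarily what the game does) is an atom holding a counter, bumped with swap!, which is safe to call from many go blocks at once because swap! retries on concurrent updates:

```clojure
(def id-counter
  ;; Hypothetical: a process-wide counter used to hand out player ids
  (atom 0))

(defn next-id!
  "Return a new, unique player id. swap! applies inc atomically, so
  concurrent callers never receive the same id."
  []
  (swap! id-counter inc))

[(next-id!) (next-id!) (next-id!)] ;=> [1 2 3]
```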

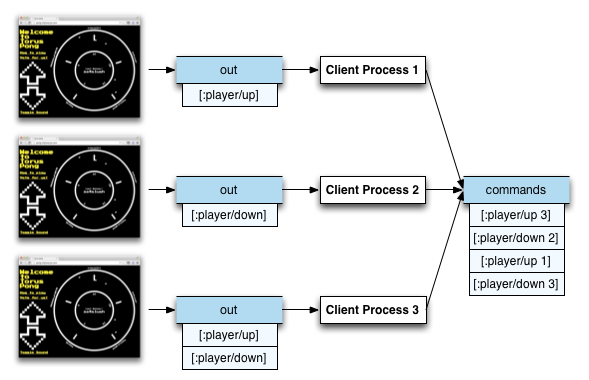

The Client Process

The main responsibility of the client process is to forward commands from the out channel of the client to the command channel consumed by the engine, decorating them with a player id.

The implementation:

```clojure
(require 'clojure.edn)

(defn client-process
  [id in out command-chan]
  (go
    (>! command-chan [:player/join id])
    ;; Forward every message from the client, tagged with its player id
    (loop [msg (<! out)]
      (when msg
        (let [command (clojure.edn/read-string msg)]
          (>! command-chan (conj command id))
          (recur (<! out)))))
    ;; out returned nil: the connection is closed
    (>! command-chan [:player/leave id])))
```

The code works as follows:

- notify the engine that a new player has joined by sending a `[:player/join id]` command to `command-chan`

- repeatedly read messages from the WebSocket `out` channel, parse them as EDN, tack on the client id and forward them to the engine

- when `nil` is read from `out`, meaning the connection has been closed, notify the game engine that the player has left by sending a `[:player/leave id]` command, and exit the process.
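The parse-and-tag step in the middle of that loop is plain Clojure and can be tried on its own. The name tag-command and the message format (an EDN vector such as "[:player/move :up]") are hypothetical; the game’s actual client messages may differ:

```clojure
(require 'clojure.edn)

(defn tag-command
  "Parse a raw WebSocket message as EDN and append the sender's id,
  turning e.g. \"[:player/move :up]\" into [:player/move :up 7]."
  [id msg]
  (conj (clojure.edn/read-string msg) id))

(tag-command 7 "[:player/move :up]") ;=> [:player/move :up 7]
```

Two details matter here. clojure.edn/read-string only reads data and cannot be tricked into evaluating code (for example via #=), unlike clojure.core/read-string with its default settings, which is what you want for input arriving from arbitrary clients. And conj appends to a vector but prepends to a list, so this relies on commands being sent as vectors.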

Propagating Change

So far we can accept new players, feed their commands to the game engine and advance the game.

The final part is propagating information about new game states to clients. This is handled by a process we called the game-state emitter.

This process consumes new game-state data put on the game-state channel by the engine process. It then broadcasts this data in some form to connected clients.

This process needs to know which channel it should use to talk to a specific client. This information is conveyed by an atom with mappings from player ids to in channels. This mapping is maintained by the connection and client processes described above, but I omitted that code from this post to simplify matters.
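That bookkeeping was left out of the post. A minimal sketch of it, assuming hypothetical register-client! and unregister-client! helpers called from the connection and client processes, is just two swap! calls on a map from player id to in channel (keywords stand in for channels here so the example runs without core.async):

```clojure
(def clients
  ;; player id -> that client's in channel (keywords stand in for channels)
  (atom {}))

(defn register-client!
  "Hypothetical: called when a player connects, to remember which channel reaches them."
  [clients-atom id in]
  (swap! clients-atom assoc id in))

(defn unregister-client!
  "Hypothetical: called when a player's connection closes, so the emitter stops sending to them."
  [clients-atom id]
  (swap! clients-atom dissoc id))

(register-client! clients 1 :in-chan-1)
(register-client! clients 2 :in-chan-2)
(unregister-client! clients 1)
@clients ;=> {2 :in-chan-2}
```

Since the emitter dereferences the atom once per game-state, each broadcast iterates over a consistent snapshot of the connected clients, even if players join or leave in the middle of it.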

Our implementation ended up always sending the entire game state to all players in the game.

```clojure
(defn game-state-emitter
  [game-state-chan clients-atom]
  (go (loop [game-state (<! game-state-chan)]
        (when game-state
          ;; Snapshot the current clients and send each one the whole state
          (doseq [[player-id client-chan] @clients-atom]
            (>! client-chan (pr-str game-state)))
          (recur (<! game-state-chan))))))
```

All Together Now

Putting channels, processes and shared state together, we get something like this:

The angry pink box is of course the shared state, i.e. the atom described above. We could have eliminated it by introducing a bit more communication, but we didn’t have time to.

Once you get past the core.async concepts, the architecture is really very simple, and worked out quite well for us.

A Word on Buffering

One thing I really like about core.async is the way it forces you to think about contention in a system of communicating processes. By default, all channels are unbuffered and blocking[1], meaning that if you try to put something on a channel and nobody is there to take it, you will block until someone arrives on the other end (and vice versa).
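A small REPL sketch of that default, assuming core.async is on the classpath (the channel name is just for illustration). offer! attempts a put without ever blocking, which makes the "nobody is there to take it" case visible:

```clojure
(require '[clojure.core.async :refer [chan thread >!! <!! offer!]])

(def unbuffered (chan)) ; no buffer argument means no buffer at all

;; offer! puts only if the put can complete right now. With no buffer
;; and no taker waiting on the other end, it can't, so it returns nil.
(offer! unbuffered :ping) ;=> nil

;; A blocking put on another thread waits until someone takes...
(thread (>!! unbuffered :pong))
;; ...and this take is the rendezvous that lets both sides continue.
(<!! unbuffered) ;=> :pong

;; Opting into a fixed buffer of 1 lets one put succeed with no taker.
(offer! (chan 1) :ping) ;=> true
```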

This is a great default, but it’s also simple to introduce different buffering policies when needed. We didn’t have a lot of time to tune this, but there are definitely places we should improve.

One example is the game-state channel:

When the engine has produced a new game-state, it wants to continue to accumulate new commands and prepare for the next iteration. If the emitter is busy transmitting a previous game-state to all clients, it won’t pick up the new game-state until it’s ready. This would block the progress of the engine, which is bad.

We could improve this by using a sliding-buffer of size 1 for the game-state channel. In that case, each new game-state put to the channel would just replace the previous, older one. The engine could continue immediately, and the emitter, when ready, would pick up the latest game-state available. It doesn’t care about older game-states it didn’t have time to distribute.
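A REPL sketch of that behaviour, assuming core.async is on the classpath and using plain maps as stand-ins for game-states. The dropping-buffer at the end is included only for contrast:

```clojure
(require '[clojure.core.async :refer [chan sliding-buffer dropping-buffer >!! <!!]])

;; Hypothetical stand-in for the game-state channel: holds only the newest value
(def game-state-chan (chan (sliding-buffer 1)))

;; The "engine" produces three states while nobody is consuming. None of
;; these puts blocks: each one evicts the value that was buffered before it.
(>!! game-state-chan {:tick 1})
(>!! game-state-chan {:tick 2})
(>!! game-state-chan {:tick 3})

;; When the "emitter" is ready, it sees only the latest state.
(<!! game-state-chan) ;=> {:tick 3}

;; For contrast: a dropping-buffer keeps the oldest value and discards new ones.
(def dropping-chan (chan (dropping-buffer 1)))
(>!! dropping-chan {:tick 1})
(>!! dropping-chan {:tick 2})
(<!! dropping-chan) ;=> {:tick 1}
```

Keeping the oldest state is the opposite of what the emitter wants, which is why sliding-buffer is the natural fit for latest-value-wins data like game-states.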

As the code currently stands, the impact of this issue is reduced by the fact that we’re wrapping each client channel in a sliding-buffer.

I think there are lots of interesting things to explore in this area.

Summary

I’ve tried to explain the way we used core.async in our architecture.

There are lots of things I didn’t mention, like:

- how we used it on the client side

- how, halfway through the competition, we changed the entire structure of the game but only had to introduce one more simple process

There are, of course, also lots of things we didn’t have time to do.

One thing in particular that I’d really like to do at some point is implement interpolation, input prediction and lag compensation as described in Source Multiplayer Networking. The gameplay currently requires very low latency, and I’m almost amazed it works as well as it does, considering the way input, communication and rendering are currently coupled.

If you read this far, played the game and maybe even liked it, please don’t forget to vote.

[1] Blocking in core.async is not necessarily the same as blocking an actual JVM thread. A great discussion on this, and on core.async in general, can be found in episode 35 of the Think Relevance podcast.
